A couple weeks ago, a reader notified Your JoeDog of a problem with siege’s cookie handling. When the server sets a cookie that’s already stored, siege won’t update its expiration time. A JoeDog Fellow told him, “That’s a paddlin’.” The last cookie in, is the next cookie out.

A couple weeks ago, a reader notified Your JoeDog of a problem with siege’s cookie handling. When the server sets a cookie that’s already stored, siege won’t update its expiration time. A JoeDog Fellow told him, “That’s a paddlin’.” The last cookie in, is the next cookie out.

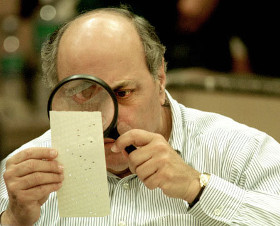

The investigation took Your JoeDog into cookie.c, a file he hasn’t touched in years. “This code is awful,” he said. “Who wrote this shit? … Hmm, some guy named Jeff Fulmer, et al. If I ever meet that guy, I’ll tell him a thing or two.”

As an ethnic German with a tendency to over engineer — well, um — everything, Your JoeDog decided to completely overhaul cookie handling. Why change two lines when you can rewrite everything, amirite? The file cookie.c now contains a COOKIE object with getters and setters, cookies.c is a cookie list object which houses all cookies in memory. Cookies will persist in $HOME/.cookies but the details are still being considered.

Here’s the thing. Siege isn’t a browser, it’s many browsers. We store cookies at the thread level. Each thread gets its own list of cookies. To distinguish them, we used pthread_self(), which is a long long int like 140018384393984 or something. Technically it’s not a long long int, it’s a pthread_t, but you get the point. The next time you fire up siege, its threads will have different IDs so we need a convenient way to associate stored cookies with new thread IDs.

Furthermore, there’s no guarantee that your next run will have the same number of simulated users. We need to consider how to handle that situation. If you have 50 stored cookies and you run 60 new users, should we stop assigning cookies at 50 or do we start repeating them starting with cookie 1 to user 51? Inquiring minds want to know. I want to know.

The new code is not yet in version control. If you want a copy, let us know. We can create a beta branch or send you a tar ball. Happy hacking.

NOTE: Your JoeDog refers to cookie and cookies as objects because they are. Yes, siege is written in C rather than C++ but you can write objects in C just fine thankyouverymuch. An object is simply a thing which references itself. To achieve this in C, you just pass a reference to the object as an argument.